Haithe: A Decentralized AI Marketplace Ecosystem

Team Details

- Spandan Barve (@marsian83)
- Riya Jain (@jriyyya)
- Kartikay Tiwari (@ishtails)

Relevant Links

- Try out Haithe here: https://haithe.hetairoi.xyz
- GitHub Repository: https://github.com/Haithedotai/core
- Watch Tutorial: https://youtu.be/2HCJYMXJGDk?si=KHq6MzZLolLXOHuA
- Pitch Deck: Haithe - Pitch Deck
- X Account: https://x.com/haithedotai
- Haithe’s official Telegram bot: @haithebot
- Telegram Community: @haitheai

The Vision

Haithe is the world’s first fully decentralized AI marketplace ecosystem that combines the power of the Metis L2 blockchain with advanced AI orchestration to create a trustless, composable, and monetizable platform for AI development and deployment.

The Problem

The current AI development landscape suffers from critical limitations:

- Centralization: AI platforms are controlled by big tech companies, limiting innovation and access.
- Data Privacy: Developers must surrender sensitive data to platforms they don’t control.
- Monetization Barriers: No secure way to monetize AI components (prompts, tools, knowledge) independently.
- Complex Integration: Orchestrating AI agents across multiple services remains technically challenging.
- Lack of Composability: AI tools and models exist in silos without seamless interoperability.
- Trust Issues: No verifiable way to ensure AI tools work as intended or prove usage for billing.

The Solution: Haithe Platform

Haithe is a comprehensive decentralized AI platform that enables anyone to build, deploy, monetize, and collaborate on AI agents in a fully trustless ecosystem running on Metis.

Core Value Propositions

Autonomous AI Agent Management

- Create and deploy AI agents with configurable capabilities
- Multi-model support (GPT, Gemini, DeepSeek, Moonshot, Custom)
- Memory and search capabilities with granular control
- OpenAI-compatible API for seamless integration

Multi-Tenant Organization System

- Role-based access control (Owner, Admin, Developer, Viewer)
- Financial management with USDT-based payments
- Team collaboration with granular permissions
- Cross-organization resource sharing

Decentralized Marketplace Economy

- Buy/sell AI components: knowledge bases, prompt sets, tools
- Creator economy with NFT-based identity and revenue tracking
- Cryptographically secured product licensing
- Verifiable product authenticity and usage

Composable AI Tools

- RPC Tools: Custom API integrations with any external service
- Knowledge Bases: Text, HTML, PDF, CSV, URL content integration
- Prompt Sets: Reusable prompt collections
- MCP Tools: Model Context Protocol compatibility
- Custom Tools: Rust, JavaScript, Python runtime support

Transparent Financial System

- USDT-based pricing and payments
- Real-time cost tracking per AI call
- Automated creator revenue distribution
- Built-in faucet system for testing

On-Chain Security & Authentication

- Wallet-based authentication with signature verification
- JWT session management with API key support
- Blockchain-secured transactions and ownership
- No centralized data storage risks
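
The session half of this flow can be illustrated with the standard library alone. The following is a minimal sketch of issuing and checking an HS256-signed JWT after a wallet signature has already been verified. The secret, the claim names (`sub`, `iat`, `exp`), and the one-hour lifetime are assumptions for illustration, not Haithe's actual configuration, and the wallet signature check itself is out of scope here.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Decode base64url, restoring the padding that was stripped."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, secret: bytes) -> str:
    digest = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return _b64url(digest)


def issue_token(wallet: str, secret: bytes, now: int, ttl: int = 3600) -> str:
    """Issue a session token for a wallet address (claim names are assumed)."""
    header = {"alg": "HS256", "typ": "JWT"}
    payload = {"sub": wallet, "iat": now, "exp": now + ttl}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    return signing_input + "." + _sign(signing_input, secret)


def verify_token(token: str, secret: bytes, now: int) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired."""
    try:
        head, body, sig = token.split(".")
    except ValueError:
        return None
    expected = _sign(head + "." + body, secret)
    # Constant-time comparison avoids leaking how many characters matched.
    if not hmac.compare_digest(expected.encode("ascii"), sig.encode("utf-8")):
        return None
    claims = json.loads(_b64url_decode(body))
    return claims if claims["exp"] > now else None
```

Passing `now` explicitly keeps the sketch deterministic; a real service would read the clock and keep the secret outside the codebase.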

How It Works

1. Organization-Centric Architecture

Users create Organizations (multi-tenant workspaces) where they can:

- Add team members with specific roles and permissions
- Manage USDT balance and expenditure tracking
- Enable AI models and marketplace products
- Configure organizational settings and policies
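
A role check of this kind can be sketched as an ordered role hierarchy. The four role names come from the platform description; the action names and the minimum role assigned to each are hypothetical and only illustrate the idea.

```python
from enum import IntEnum


class Role(IntEnum):
    """Roles ordered from least to most privileged."""
    VIEWER = 1
    DEVELOPER = 2
    ADMIN = 3
    OWNER = 4


# Hypothetical actions and the minimum role assumed to be allowed to perform them.
MIN_ROLE = {
    "view_project": Role.VIEWER,
    "create_agent": Role.DEVELOPER,
    "invite_member": Role.ADMIN,
    "withdraw_funds": Role.OWNER,
}


def can(role: Role, action: str) -> bool:
    """Allow an action only if it is known and the role is high enough."""
    required = MIN_ROLE.get(action)
    return required is not None and role >= required
```

Unknown actions are denied by default, so adding a new action without assigning it a role fails closed rather than open.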

2. AI Agent Creation & Deployment

Within organizations, users create Projects/Agents that:

- Connect to multiple AI providers (GPT, Gemini, DeepSeek, etc.)
- Integrate marketplace products (knowledge bases, tools, prompts)
- Configure memory retention and web search capabilities
- Provide OpenAI-compatible API endpoints for external integration
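
Because the endpoints follow the OpenAI chat-completions shape, a client request can be sketched as below. The base URL, API key, and model name are placeholders and not real Haithe values; the function only builds the request and does not send it.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, user_text: str
) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat-completions format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: substitute the endpoint and key issued for your project.
request = build_chat_request(
    "https://example.invalid/v1", "placeholder-key", "placeholder-model", "Hello"
)
```

Sending it is then a matter of passing `request` to `urllib.request.urlopen`, or pointing any existing OpenAI-compatible client at the same base URL.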

3. Marketplace Ecosystem

Creators can register and publish:

- Knowledge Bases: Encrypted content (text, PDFs, URLs, CSVs)
- Prompt Sets: Curated prompt collections for specific use cases
- RPC Tools: Custom API integrations with external services
- Custom Tools: Code-based tools in Rust, JavaScript, Python

All products are priced per call and generate revenue for creators.
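
Per-call pricing can be illustrated with a small settlement function. This sketch assumes that the cost of one call is the model price plus the price of each marketplace product it used, and that each product's full price goes to its creator; the actual fee structure, including any platform share, is not specified here.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Dict, List, Tuple


def settle_call(
    model_price: Decimal, products: List[Tuple[str, Decimal]]
) -> Tuple[Decimal, Dict[str, Decimal]]:
    """Return the total cost of one call and the amount owed to each creator.

    `products` holds one (creator, per-call price) pair for every
    marketplace product the call used.
    """
    payouts: Dict[str, Decimal] = defaultdict(Decimal)
    for creator, price in products:
        payouts[creator] += price
    total = model_price + sum(payouts.values(), Decimal("0"))
    return total, dict(payouts)


total, payouts = settle_call(
    Decimal("0.010"),
    [("alice", Decimal("0.002")), ("bob", Decimal("0.001")), ("alice", Decimal("0.003"))],
)
```

`Decimal` is used instead of floats so that small per-call amounts add up exactly.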

4. Real-Time AI Interactions

Users interact with AI agents through:

- Web-based chat interface with conversation management
- RESTful API with OpenAI compatibility
- Telegram and Discord bot integrations
- Real-time model switching and parameter adjustment

5. Blockchain Financial Rails

All financial operations are secured by smart contracts on the Metis Hyperion Testnet:

- USDT payments for AI calls and marketplace purchases
- Automated revenue distribution to creators
- Transparent cost tracking and billing
- Smart contract-enforced access controls
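
The balance and expenditure tracking described above can be modeled off-chain as integer accounting in token base units, which is how token contracts typically store amounts. The sketch below assumes a 6-decimal token; the precision of the test token and the class and function names are assumptions, not taken from the Haithe contracts.

```python
from decimal import Decimal

DECIMALS = 6  # assumed token precision; check the deployed token contract


def to_base_units(amount: str) -> int:
    """Convert a human-readable amount such as "1.5" into integer base units."""
    scaled = Decimal(amount).scaleb(DECIMALS)
    if scaled != scaled.to_integral_value():
        raise ValueError("amount has more precision than the token supports")
    return int(scaled)


class OrgBalance:
    """Tracks an organization's remaining balance and total expenditure."""

    def __init__(self, deposit: int = 0) -> None:
        self.balance = deposit
        self.spent = 0

    def charge(self, cost: int) -> None:
        """Debit one call or purchase, refusing to overdraw the balance."""
        if cost < 0:
            raise ValueError("cost must be non-negative")
        if cost > self.balance:
            raise ValueError("insufficient balance")
        self.balance -= cost
        self.spent += cost
```

Keeping amounts as integers avoids rounding drift between the off-chain view and the on-chain balance.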

Technical Architecture

Frontend (React & On-Chain Integration)

- Framework: React 19 with TypeScript, Bun build system
- On-Chain Interaction: Privy authentication, Wagmi for Metis L2 interaction
- State Management: Zustand + TanStack Query for optimal performance
- UI/UX: Modern design system with Tailwind CSS and Radix UI
- Real-Time: WebSocket connections for live chat experiences

Backend (Rust + AI Orchestration)

- Framework: Built on the Alith agentic framework by Lazai Network, with Actix Web 4.9.0 and an async-first architecture
- Database: SQLite with SQLx for high-performance queries
- Authentication: JWT tokens with wallet signature verification
- AI Integration: Custom orchestration layer supporting multiple providers
- Blockchain: ethers-rs for smart contract interaction with the Metis network
- API: OpenAI-compatible endpoints plus native Haithe APIs

Smart Contract Architecture (Solidity)

- HaitheOrchestrator: Central coordination and platform management
- HaitheOrganization: Multi-tenant workspace with financial controls
- HaitheProduct: Marketplace product representation with creator attribution
- HaitheCreatorIdentity: NFT-based creator identity and revenue management
- tUSDT: Test token for payments and financial operations

AI Model Integration

- Supported Providers: Google Gemini, OpenAI GPT, DeepSeek, Moonshot, Custom models
- Model Orchestration: Dynamic model resolution and configuration
- Tool Integration: RPC tools, knowledge bases, search capabilities
- Memory Management: Conversation history with configurable retention
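
Configurable retention can be pictured as a sliding window over the conversation. The sketch below keeps only the most recent messages and prepends a system prompt when building the context for a model call; counting retention in messages (rather than tokens or time) and the class name are assumptions made for illustration.

```python
from collections import deque
from typing import Deque, Dict, List


class ConversationMemory:
    """Keeps the last `max_messages` messages of a conversation."""

    def __init__(self, max_messages: int) -> None:
        # A bounded deque silently drops the oldest entry when full.
        self._messages: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def context(self, system_prompt: str) -> List[Dict[str, str]]:
        """Build the message list to send to the model."""
        return [{"role": "system", "content": system_prompt}, *self._messages]


memory = ConversationMemory(max_messages=2)
memory.add("user", "first")
memory.add("assistant", "second")
memory.add("user", "third")  # "first" is evicted here
```

Setting `max_messages` to 0 disables memory entirely, since the deque then retains nothing.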

Blockchain Networks

- Development: Hardhat local network with full testing suite
- Staging: Deployed on the Hyperion Testnet of the Metis Layer 2 (L2) blockchain
- Production: Configurable for mainnet deployment on Metis

Alith Integration

The entire core of the agent-building platform is written in Rust using Alith.

Our platform uses Alith for agent creation, knowledge management, and encryption and decryption when communicating with the data availability layer.

The platform also includes a marketplace where people can sell custom knowledge, tools, MCP access, and RPC tools, all of which is also managed with Alith.

We also use the request_reward and verification implementations to request a DAT reward for our creators.

Unique Haithe Features

- Granular Permission System: Organization-level and project-level role management, API key generation with scoped permissions, and member invitation workflows.
- Dynamic Product Marketplace: Real-time product enablement, category-based search, creator profiles with earnings analytics, and product versioning.
- Multi-Provider AI Orchestration: Seamless switching between AI providers, cost optimization across models, and fallback mechanisms.
- Financial Transparency: Real-time cost tracking, detailed expenditure reports, automated creator revenue distribution, and a built-in faucet.
- Developer-First API Design: OpenAI-compatible endpoints for easy migration, a comprehensive REST API, and WebSocket support.
- Extensible Tool System: RPC tool creation, MCP (Model Context Protocol) compatibility, custom code execution in sandboxed environments, and a plugin architecture.
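
The fallback mechanism mentioned under multi-provider orchestration can be sketched as an ordered list of providers tried in turn. Each provider is represented here by a plain callable; the function and exception names are hypothetical, and real provider clients, retries, and cost-based ordering are left out.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every configured provider has raised an error."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Tuple[str, Callable[[str], str]]]
) -> Tuple[str, str]:
    """Try each (name, call) pair in order and return the first success.

    Returns the name of the provider that answered together with its reply.
    """
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # any provider failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))
```

Returning the provider name alongside the reply makes it possible to bill the call at the price of the model that actually served it.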

Current Implementation Status

Fully Implemented

- Complete on-chain authentication and wallet integration on the Metis network
- Organization and project management systems
- AI model orchestration with multiple providers
- Marketplace creation and product publishing
- Real-time chat interface with AI agents
- Smart contract deployment and interaction on Hyperion Testnet
- Financial management with USDT payments
- API key generation and management

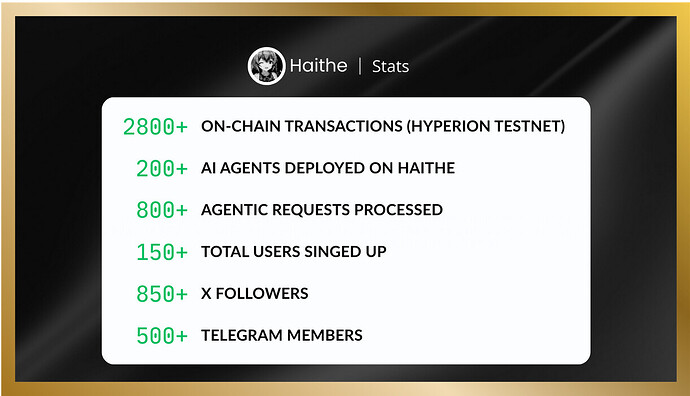

Our Stats

We launched Haithe on the Hyperion Testnet, followed by our spotlight campaign, to validate our core assumptions. The initial response has exceeded our expectations. Here are some stats:

First two weeks response

In Active Development

- Advanced analytics and usage insights
- Visual workflow builder for complex AI automations
- Enhanced creator tools and revenue analytics
- Mobile applications for iOS and Android
- Additional AI provider integrations

Planned Features

- Trusted Execution Environment (TEE) integration
- Advanced auditor verification system
- Cross-chain compatibility
- Advanced workflow automation
- Enterprise-grade security features

Competitive Advantages

- True Decentralization: Unlike centralized AI platforms, Haithe leverages the Metis L2 blockchain for true decentralization with no single point of failure.
- Composable Architecture: Mix and match AI models, tools, and knowledge bases from different creators.
- Creator Economy: First platform to enable monetization of AI components with transparent, on-chain revenue sharing.
- Developer Experience: OpenAI-compatible APIs make migration effortless.
- Financial Innovation: USDT-based payments with automated creator revenue distribution via smart contracts.
- Security First: Wallet-based authentication with cryptographic verification.
- Multi-Tenant Design: Organizations can manage multiple projects and teams efficiently.

Target Market & Use Cases

Primary Users

- AI Developers: Building and deploying AI applications
- Content Creators: Monetizing AI prompts, knowledge, and tools
- Enterprises: Managing AI workflows with team collaboration
- Researchers: Accessing and contributing to AI knowledge bases

Use Cases

- SaaS Applications: AI-powered customer service, content generation
- Research Tools: Academic research with specialized knowledge bases
- Business Automation: Workflow automation with AI decision making
- Content Creation: AI-assisted writing, design, and media production
- Educational Platforms: AI tutors with subject-specific knowledge

Market Opportunity

The global AI market is projected to reach $1.8 trillion by 2030. Haithe addresses multiple key growth segments simultaneously:

- AI development platforms ($50B+ market)
- Creator economy platforms ($104B+ market)
- Enterprise AI solutions ($150B+ market)
- Decentralized Layer 2 infrastructure ($67B+ market)

Innovation Highlights

Technical Innovation

- First platform to combine AI orchestration with a decentralized marketplace on the Metis L2.
- Novel multi-tenant organization system for AI resource management.
- Pioneering RPC tool integration for unlimited AI capabilities.
- Revolutionary creator economy for AI component monetization.

User Experience Innovation

- Seamless on-chain onboarding without sacrificing usability.
- Real-time collaboration on AI projects with role-based permissions.
- Visual marketplace for discovering and integrating AI components.
- OpenAI-compatible APIs for zero-friction developer adoption.

Business Model Innovation

- Per-call pricing model for AI services with transparent costs.
- Automated creator revenue sharing with smart contracts.
- Multi-token economy supporting both platform and creator incentives.
- Freemium model with a built-in faucet for user onboarding.

Conclusion

Haithe represents the future of AI development: decentralized, composable, monetizable, and accessible to everyone. By breaking down the silos of traditional AI and empowering a global community of creators, we are building more than just a platform; we are fostering an ecosystem. By harnessing the security and efficiency of the Metis L2 blockchain, Haithe provides the essential infrastructure for the next generation of intelligent, autonomous, and transparent AI applications. We invite you to join us in building this open and collaborative future.